Solutions & Applications

Total AI Solution

AI applications are everywhere, and AI has made significant strides. A wave of impressive products and applications is changing the world. In healthcare, fintech, retail, and other industries, AI plays an important role. Meanwhile, a new class of products labeled AI, such as AI phones, AI PCs, AI pins, and the Rabbit R1, is reaching the market, and all of them are driven by AI engines.

What unleashes the power of these AI products? The secrets are LLMs (Large Language Models) and LAMs (Large Action Models). Without them, AI products cannot deliver AI magic to end users. These models are trained on powerful AI servers, which are typically located in AI data centers.

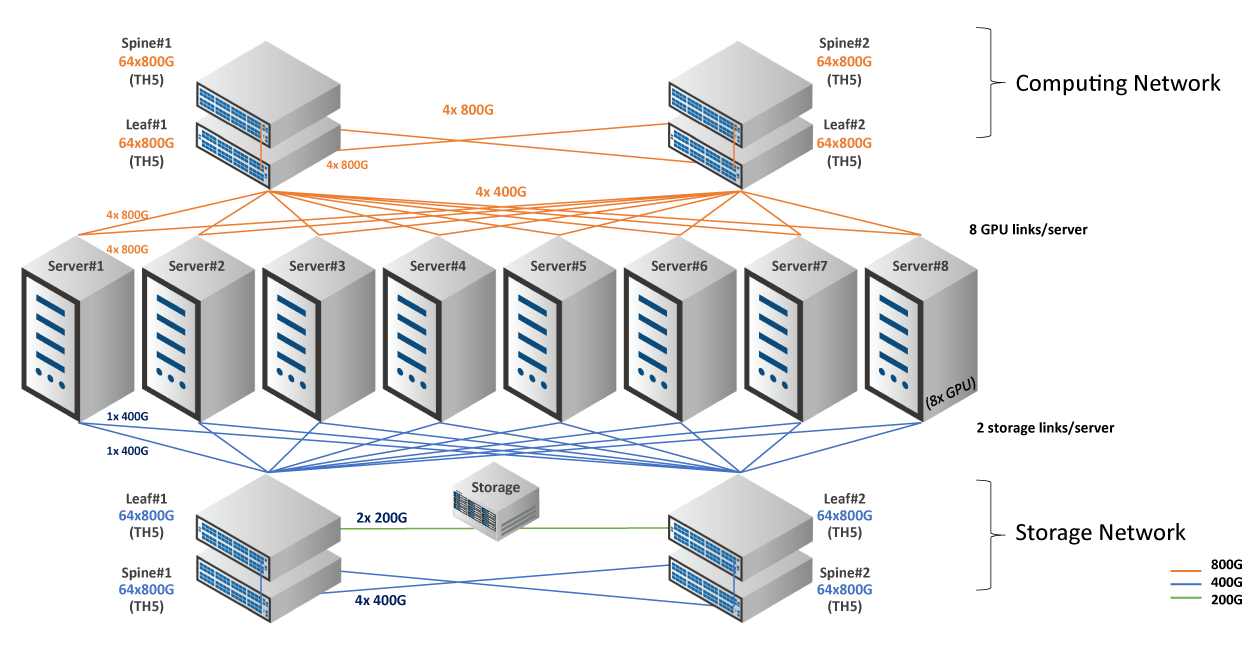

An AI data center comprises AI servers, high-capacity switches, transceivers, and cables, each of which is indispensable.

AI traffic has the following characteristics.

Moving from a traditional network to an AI-based network is an evolution that changes the way the network works. In AI/ML clusters, job completion time (JCT) is the most important metric.
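
A toy model shows why JCT is so sensitive to the network. In synchronous data-parallel training, each step ends only when the slowest worker's communication finishes, so one congested flow stretches every step. The sketch below assumes hypothetical flow times and ignores any overlap between computation and communication.

```python
def step_time_ms(compute_ms: float, flow_times_ms: list[float]) -> float:
    """One synchronous training step: compute, then wait for the slowest flow."""
    return compute_ms + max(flow_times_ms)


def job_completion_time_ms(steps: int, compute_ms: float,
                           flow_times_ms: list[float]) -> float:
    """JCT under the simplifying assumption that every step looks the same."""
    return steps * step_time_ms(compute_ms, flow_times_ms)


# Hypothetical numbers: eight flows per step, 50 ms of compute per step.
healthy = [10.0] * 8               # every flow finishes in 10 ms
congested = [10.0] * 7 + [30.0]    # one flow crosses a congested link

print(job_completion_time_ms(1000, 50.0, healthy))    # 60000.0
print(job_completion_time_ms(1000, 50.0, congested))  # 80000.0
```

A single slow flow out of eight adds a third to the total JCT in this example, which is why congestion control and load balancing matter more than average link utilization.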

How To Reduce Job Completion Time

RoCE (RDMA over Converged Ethernet) plays a central role in AI networks. Because AI networks must be lossless, RoCE deployments rely on mechanisms that control the transmission rate and manage traffic congestion.

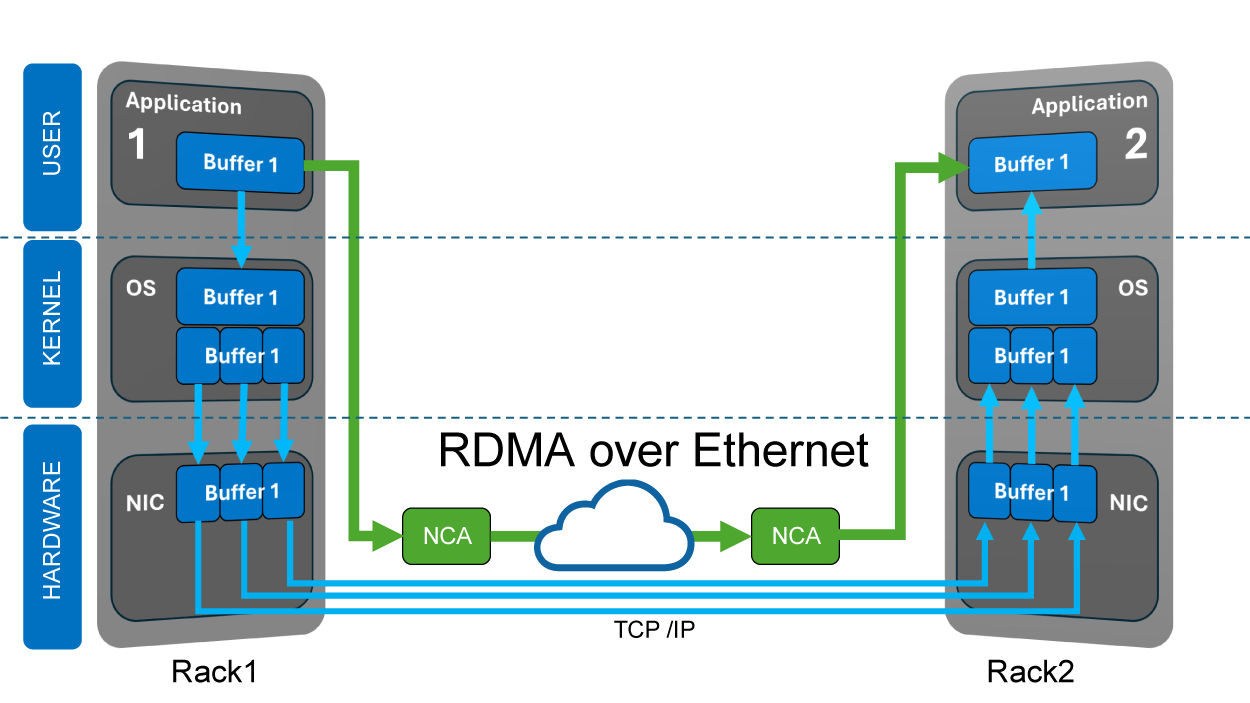

What is RDMA (Remote Direct Memory Access)? RDMA is a technology that transfers data between memory and peripheral devices, or from memory to memory across hosts, without involving the CPU or operating system in the data path. With RDMA over Ethernet, the application hands its data buffers directly to the NIC, which reads and writes application memory without copying data through the kernel network stack. RoCEv2 carries RDMA traffic inside UDP/IP packets, so it can be routed across Layer 3 networks. Bypassing the CPU and kernel reduces processing overhead, which lowers latency and achieves higher throughput.
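
To make the RoCEv2 encapsulation concrete, the sketch below packs the 12-byte InfiniBand Base Transport Header (BTH) and wraps it in a UDP header addressed to port 4791, the port assigned to RoCEv2. It is a simplified illustration, not a working RoCE stack: the Ethernet and IP headers and the 4-byte ICRC trailer are omitted, the UDP checksum is left at 0 (which means "not computed" in IPv4), and the queue pair number, PSN, and source port are hypothetical values.

```python
import struct

ROCEV2_UDP_DPORT = 4791       # UDP destination port assigned to RoCEv2
OPCODE_RC_SEND_ONLY = 0x04    # Reliable Connection "SEND Only" opcode


def build_bth(opcode: int, dest_qp: int, psn: int,
              pkey: int = 0xFFFF, ack_req: bool = True) -> bytes:
    """Pack a 12-byte Base Transport Header (big-endian, no padding)."""
    if not (0 <= dest_qp < 1 << 24 and 0 <= psn < 1 << 24):
        raise ValueError("dest_qp and psn are 24-bit fields")
    flags = 0                           # SE=0, M=0, PadCnt=0, TVer=0
    ack_byte = 0x80 if ack_req else 0   # AckReq bit followed by 7 reserved bits
    return struct.pack(
        ">BBHB3sB3s",
        opcode,
        flags,
        pkey,                           # partition key
        0,                              # reserved byte
        dest_qp.to_bytes(3, "big"),     # destination queue pair
        ack_byte,
        psn.to_bytes(3, "big"),         # packet sequence number
    )


def build_udp_segment(src_port: int, payload: bytes) -> bytes:
    """Prepend an 8-byte UDP header; checksum left as 0 for simplicity."""
    header = struct.pack(">HHHH", src_port, ROCEV2_UDP_DPORT,
                         8 + len(payload), 0)
    return header + payload


# Hypothetical example: a small SEND to queue pair 0x000123.
bth = build_bth(OPCODE_RC_SEND_ONLY, dest_qp=0x000123, psn=42)
segment = build_udp_segment(src_port=49152, payload=bth + b"hello")
print(segment.hex())
```

Because the RDMA transport header rides inside ordinary UDP, standard IP switches can forward RoCEv2 traffic, while the NIC handles the transport logic in hardware.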

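PFC (Priority-based Flow Control) and ECN (Explicit Congestion Notification) work together in the network to achieve lossless transmission and congestion avoidance: PFC pauses traffic on a given priority before switch buffers overflow, while ECN marks packets to signal building congestion so that senders can slow down. DCQCN (Data Center Quantized Congestion Notification) builds on this combination, using ECN marks to drive end-to-end rate control with PFC as a safeguard against packet loss. It is a high-performance congestion management solution for AI networks and the most widely used congestion control algorithm in RoCEv2 networks.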
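
The DCQCN control loop can be sketched as follows, loosely following the rate-update rules in the original DCQCN paper. The switch marks packets with a RED-style probability as its queue grows, the receiver answers marked packets with a Congestion Notification Packet (CNP), and the sender NIC cuts its rate on each CNP and recovers it over time. This is a simplified model under stated assumptions: the hyper-increase stage and byte-counter triggers are omitted, and all parameter values are illustrative rather than NIC or switch defaults.

```python
from dataclasses import dataclass


def ecn_mark_probability(queue_kb: float, k_min_kb: float,
                         k_max_kb: float, p_max: float) -> float:
    """Switch side: RED-style ECN marking probability for a given queue depth."""
    if queue_kb <= k_min_kb:
        return 0.0
    if queue_kb >= k_max_kb:
        return 1.0
    return p_max * (queue_kb - k_min_kb) / (k_max_kb - k_min_kb)


@dataclass
class DcqcnSender:
    """Sender side (reaction point) rate control; values are assumptions."""
    line_rate_gbps: float = 400.0
    g: float = 1 / 256            # gain for the congestion estimate alpha
    rate_ai_gbps: float = 5.0     # additive-increase step
    fast_recovery_steps: int = 5  # increase events spent in fast recovery

    def __post_init__(self) -> None:
        self.current_rate = self.line_rate_gbps  # Rc
        self.target_rate = self.line_rate_gbps   # Rt
        self.alpha = 1.0
        self.increase_events = 0

    def on_cnp(self) -> None:
        """CNP received: remember the old rate, then cut in proportion to alpha."""
        self.target_rate = self.current_rate
        self.current_rate *= 1 - self.alpha / 2
        self.alpha = (1 - self.g) * self.alpha + self.g
        self.increase_events = 0

    def on_alpha_timer(self) -> None:
        """No CNP during the last period: decay the congestion estimate."""
        self.alpha = (1 - self.g) * self.alpha

    def on_increase_timer(self) -> None:
        """Fast recovery toward the target, then additive increase of the target."""
        self.increase_events += 1
        if self.increase_events > self.fast_recovery_steps:
            self.target_rate = min(self.target_rate + self.rate_ai_gbps,
                                   self.line_rate_gbps)
        self.current_rate = (self.target_rate + self.current_rate) / 2


sender = DcqcnSender()
sender.on_cnp()                 # congestion signalled: rate halves while alpha == 1
for _ in range(6):
    sender.on_increase_timer()  # rate climbs back toward the target
print(sender.current_rate)      # 396.875
```

The multiplicative cut reacts quickly to congestion, while the averaging recovery avoids overshooting, which is how DCQCN keeps queues short enough that PFC pauses are rarely needed.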

GPUs (Graphics Processing Units) were initially designed to accelerate computer graphics and the processing of pixels for images. Researchers have since found that GPUs also excel at data science and image analysis, and they are now applied far more widely, including in AI and ML (Machine Learning). This broader role is why GPUs are now associated with GPGPU (General-Purpose computing on Graphics Processing Units). Working alongside CPUs, GPUs accelerate the workflow and unleash the power of AI.

Edgecore offers an AI-ready switch NOS, SONiC, which is built on the Switch Abstraction Interface (SAI) and supported across a broad spectrum of switching platforms ranging from 1G to 800G, covering leaf through super-spine layer switches.

Running the versatile SONiC, Edgecore switches support telemetry monitoring and management, and they optimize throughput through fine-tuned lossless networking and load balancing. Together, these features reduce congestion, precisely locate congestion points, optimize load distribution, and shorten JCT.
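
One reason load balancing needs care in AI fabrics is that ECMP (Equal-Cost Multi-Path) routing typically hashes each packet's 5-tuple to choose an uplink, so every packet of a flow follows the same path. The sketch below uses `zlib.crc32` as a stand-in for a switch ASIC's hardware hash and hypothetical addresses. It shows that flows sharing identical headers all collapse onto one uplink, while varying the UDP source port, which RoCEv2 allows to be used for flow entropy, spreads them across paths.

```python
import zlib
from collections import Counter

ROCEV2_UDP_DPORT = 4791
UDP_PROTO = 17
UPLINKS = 8


def ecmp_path(src_ip: str, dst_ip: str, src_port: int, dst_port: int,
              proto: int, num_paths: int) -> int:
    """Pick an uplink index by hashing the 5-tuple (illustrative hash only)."""
    key = f"{src_ip}|{dst_ip}|{src_port}|{dst_port}|{proto}".encode()
    return zlib.crc32(key) % num_paths


# 64 flows between the same two hosts, all reusing one UDP source port.
same_port = Counter(
    ecmp_path("10.0.0.1", "10.0.1.1", 49152, ROCEV2_UDP_DPORT,
              UDP_PROTO, UPLINKS)
    for _ in range(64)
)

# The same 64 flows, each given a distinct UDP source port.
varied_port = Counter(
    ecmp_path("10.0.0.1", "10.0.1.1", 49152 + i, ROCEV2_UDP_DPORT,
              UDP_PROTO, UPLINKS)
    for i in range(64)
)

print("same source port:  ", dict(same_port))
print("varied source port:", dict(varied_port))
```

Static hashing can still leave some uplinks busier than others, which is why load-distribution features and telemetry that pinpoints hot links remain important.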

In addition, Edgecore provides end-to-end services and support for SONiC at different stages, including presales and post-sales services.

Solution Key Benefits

Why Edgecore AI solutions

A well-designed AI data center must take into account GPU architecture, GPU features, total cost, and power consumption. Edgecore AI solutions are optimized for high performance and efficiency, and they excel in heavy-load environments.

Edgecore's cutting-edge AI/ML switches and servers are powered by chipsets from world-leading vendors and designed by experienced engineers to maximize compute efficiency. Edgecore also provides plug-and-play transceivers and cables that are fully qualified with Edgecore switches through rigorous validation, so you don't have to worry about compatibility.

Meanwhile, we embrace open architectures, actively participate in a range of open projects, and deliver complete, flexible, and future-ready solutions for your AI/ML connectivity needs.

Edgecore stays ahead of the market. From edge to core and from switch to server, Edgecore now provides customers with what they need to build an AI data center, especially for deep learning training and inference.

Accelerate your AI network, now!

Related Products

The Edgecore AGS8200 is a high-performance, scalable GPU-based server for AI (Artificial Intelligence) and ML (Machine Learning) applications. The server is ideal for training large language models, as well as for automation, object classification, and recognition use cases.

| Transceiver | Fiber | Wavelength (nm) | Reach |
|---|---|---|---|
| 800G QSFP112-DD-2xDR4 | SMF | 1310 | 500 m |
| 800G QSFP112-DD 2xFR4 | SMF | 1310 | 2 km |
| 800G OSFP SR8 | OM4 | 850 | 50 m |
| 800G OSFP 2xSR4 | OM4 | 850 | 50 m |
| 800G OSFP-2xFR4 | SMF | 1310 CWDM | 2 km |
| 800G OSFP-2xDR4 | SMF | 1310 | 500 m |