I lived through the early days of SDN, when a dozen or more VC-backed startups hit the scene, each with its own spin on a network operating system. The common trait: each startup's O/S would be loaded on industry-standard open switches based on merchant silicon. Not surprisingly, Edgecore was one of the main choices for the open hardware platform needed to run those operating systems, since it has been providing open solutions for a very long time.

It was the wild west of open, and early adopters of this disaggregated approach were demonstrating with their dollars that separating hardware from software was worth pursuing. They were spending real money to build production-grade data center switching platforms that were ‘different’ and ‘better’ than the traditional, proprietary solutions already available. The operating system wars continued from roughly 2010 to 2016, and then something magical happened…

SONiC (Software for Open Networking in the Cloud) entered the landscape.

SONiC was developed by Microsoft during the very period in which the SDN players were battling for dominance. Looking for a way to power its Azure cloud services, Microsoft made the strategic choice to build its own O/S on the workhorse Linux platform. SONiC worked so well that in 2017 Microsoft offered it to the open-source community. The rest, as they say, is history! And while other network operating systems still serve specialized applications such as telco, SONiC is fast becoming the dominant player in open infrastructure buildouts for the data center and AI inferencing.

The SONiC project is currently managed by the Linux Foundation and has more than 5,000 active community members representing more than 500 companies. It is designed for high-performance and AI data center deployments and offers a flexible, scalable, lower-TCO networking solution, making it particularly well suited to the latest generation of GPU-centric infrastructure with demanding and evolving needs.

So why is SONiC so important for an AI data center deployment?

The value of SONiC in AI Data Centers:

1. High-Performance Networking Fabric: AI workloads, especially distributed training across multiple GPUs, require high-bandwidth, low-latency, lossless network fabrics. SONiC supports RDMA over Converged Ethernet (RoCE) deployments, including the Priority Flow Control (PFC) and Explicit Congestion Notification (ECN) features that lossless RoCE traffic relies on. This is crucial for efficient GPU-to-GPU communication and memory access, minimizing bottlenecks and maximizing training speed.

2. Scalability: AI data centers often need to scale rapidly to accommodate growing datasets and more complex models. SONiC’s modular architecture and support for a wide range of hardware platforms allow for seamless scaling of the network infrastructure without being locked into a single vendor’s ecosystem.

3. Flexibility and Customization: The open-source nature of SONiC allows network operators to customize the operating system to meet the specific requirements of their AI infrastructure. They can integrate specialized protocols, automation tools, and monitoring systems tailored for AI workloads.

4. Vendor Neutrality: SONiC decouples the network operating system from the underlying hardware. This disaggregation allows AI data centers to choose the best-of-breed hardware from various vendors, avoiding vendor lock-in and potentially reducing capital expenditure. Organizations can select hardware based on performance, cost, and specific features relevant to AI acceleration (like specific ASIC capabilities).

5. Automation and Orchestration: Managing the large-scale network infrastructure in an AI data center requires robust automation. SONiC supports open and standardized APIs (like REST APIs) and integrates well with various automation and orchestration tools, enabling efficient provisioning, configuration, monitoring, and management of the network. This is crucial for handling the dynamic nature of AI workloads and infrastructure.

6. Network Visibility and Diagnostics: Real-time monitoring and diagnostics are essential for maintaining the performance and stability of the AI network. SONiC provides a unified interface for telemetry and diagnostics across different hardware vendors, simplifying troubleshooting and performance optimization.

7. Integration with AI Infrastructure: SONiC can be integrated with other components of the AI infrastructure, such as SmartNICs (using initiatives like DASH – Disaggregated APIs for SONiC Hosts), to further enhance performance and offload network processing tasks from the CPUs, freeing them up for AI computations.
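To make item 1 a little more concrete, here is a minimal sketch of what marking traffic classes as lossless for RoCE might look like as a SONiC-style configuration fragment, generated in Python so it could feed the kind of automation pipeline described in item 5. It rests on stated assumptions: the helper `lossless_roce_fragment`, the profile name `ROCE_LOSSLESS`, the port names and the threshold values are all hypothetical. The table and field names are modeled on SONiC's config_db (`PORT_QOS_MAP`, `WRED_PROFILE`, `QUEUE`), but their exact names, value formats and the companion buffer and DSCP-mapping tables a working lossless setup also needs vary by SONiC release and platform.

```python
import json


# Hypothetical helper (not part of SONiC): emits a config_db-style fragment that
# enables PFC and ECN marking on the given traffic classes. Table and field names
# are modeled on SONiC config_db; verify them against your release before use.
def lossless_roce_fragment(ports, lossless_tcs=(3, 4), profile="ROCE_LOSSLESS",
                           min_bytes=1048576, max_bytes=2097152, mark_pct=5):
    tcs = sorted(lossless_tcs)
    return {
        # ECN/WRED profile: once queue depth passes min_bytes, packets are
        # ECN-marked rather than dropped, with the marking probability ramping
        # up toward mark_pct percent at max_bytes.
        "WRED_PROFILE": {
            profile: {
                "ecn": "ecn_all",
                "wred_green_enable": "true",
                "green_min_threshold": str(min_bytes),
                "green_max_threshold": str(max_bytes),
                "green_drop_probability": str(mark_pct),
            }
        },
        # Enable Priority Flow Control on the lossless classes for each port.
        "PORT_QOS_MAP": {p: {"pfc_enable": ",".join(map(str, tcs))} for p in ports},
        # Attach the ECN profile to the matching queue on each port.
        # (Some older releases expect a "[WRED_PROFILE|<name>]" reference instead.)
        "QUEUE": {f"{p}|{tc}": {"wred_profile": profile} for p in ports for tc in tcs},
    }


if __name__ == "__main__":
    # Example with two hypothetical ports: print JSON for review before applying.
    print(json.dumps(lossless_roce_fragment(["Ethernet0", "Ethernet4"]), indent=2))
```

The intent of pairing PFC with ECN marking on the same queues is that congestion gets signaled to RoCE endpoints so they can slow down before PFC has to pause the link. Before applying anything like this, compare it against the QoS templates shipped for your specific switch and SONiC release.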

The Benefits of SONiC for AI Data Centers:

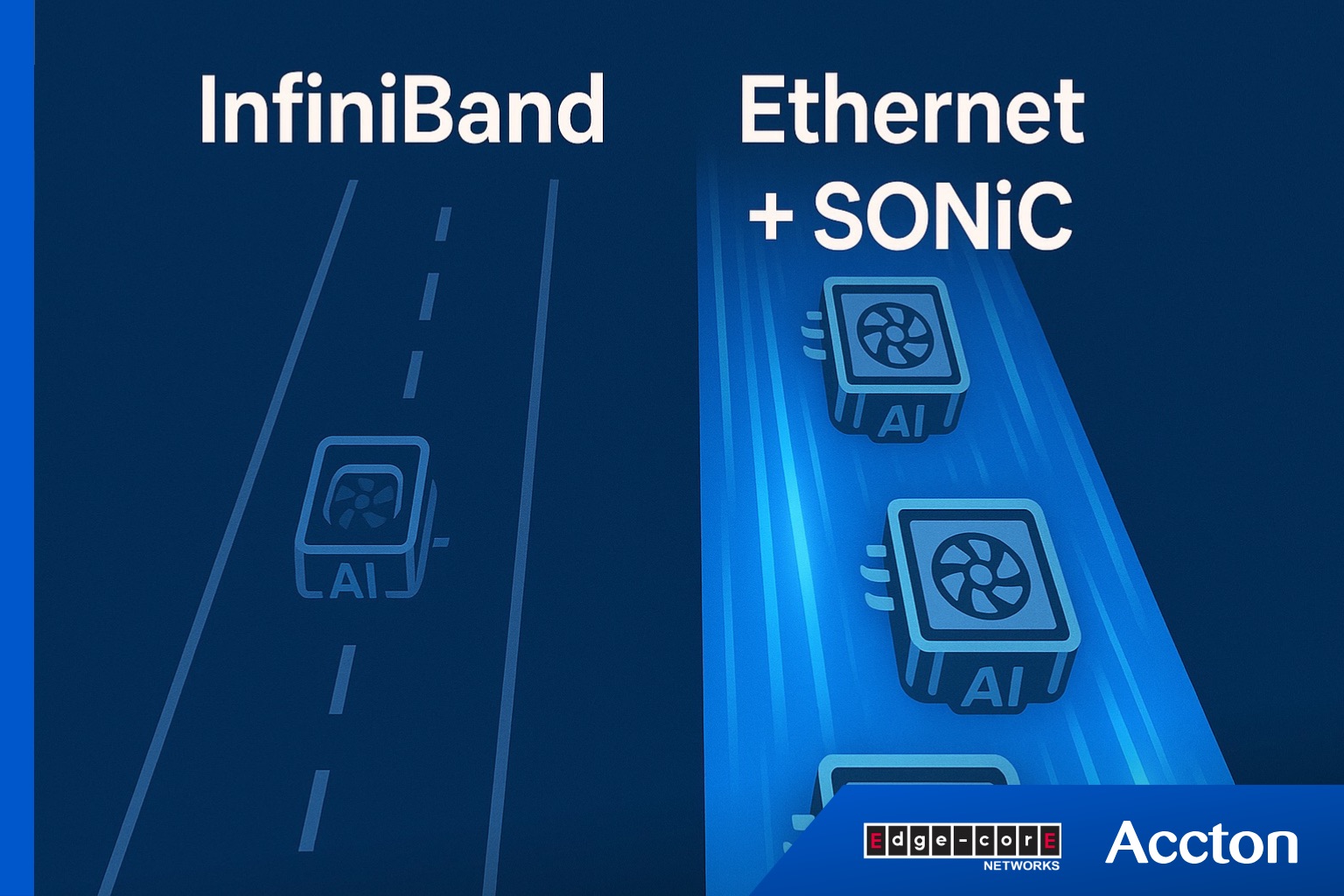

• Lower TCO: By breaking free from proprietary systems and leveraging today’s very capable merchant silicon, AI data centers can significantly reduce both upfront capital costs (CAPEX) on hardware and ongoing operational costs (OPEX) related to licensing and vendor lock-in. In fact, analyst firm Dell’Oro has published trend data projecting that Ethernet will overtake InfiniBand in AI data centers within the next 24 months, and IDC has indicated that SONiC is the fastest-growing open network operating system for open switches. Ethernet and SONiC can deliver the required connectivity at a lower TCO than today’s extremely limited marketplace of InfiniBand offerings.

• Increased Innovation and Agility: The open-source community drives rapid innovation within SONiC. AI data centers can benefit from faster adoption of new networking technologies and contribute to the development of features specifically needed for AI workloads. The modular architecture allows for quicker deployment of new services and features without disrupting the entire network.

• Enhanced Control and Flexibility: Organizations gain greater control over their network infrastructure, enabling them to tailor and optimize it precisely for the demands of AI applications. They are not limited by the features and roadmaps of a single vendor.

• Improved Reliability and Uptime: SONiC’s containerized components allow for in-service upgrades and fault isolation, minimizing downtime, which is critical for uninterrupted AI training and inference tasks. Support for active-active Top-of-Rack (ToR) configurations further enhances network resilience.

• Strong Community Support: The large and active SONiC community, including major players in the AI and networking space (like Microsoft, NVIDIA, Broadcom, and others), provides extensive support, documentation, and a wealth of expertise, making it easier to deploy and manage SONiC in complex AI environments.

The Net Result:

AI data centers are trending towards Ethernet switching, Ethernet switching is trending towards open switches that include open silicon, and open switches are trending towards SONiC to provide value. Why? SONiC provides a modern, adaptable, and cost-effective networking foundation that is well suited to the unique and demanding requirements of AI inferencing (high performance and low latency, RDMA, congestion control, etc.). Open infrastructure based on SONiC helps dramatically increase the utilization of high-cost resources such as GPUs and reduce training and inference job times. SONiC for AI delivers greater control, maximum flexibility, and enviable AI inference workload performance.


🔹 Visit Edgecore to learn more about SONiC 👉 https://www.edge-core.com/sonic/

🔹 Stay connected with us 👉 https://www.edge-core.com/subscribe-newsletter/

🔹 Contact us 👉 https://www.edge-core.com/Contact-inquiry/
