Nexvec™

A Turnkey Open Infrastructure Solution for Enterprise AI

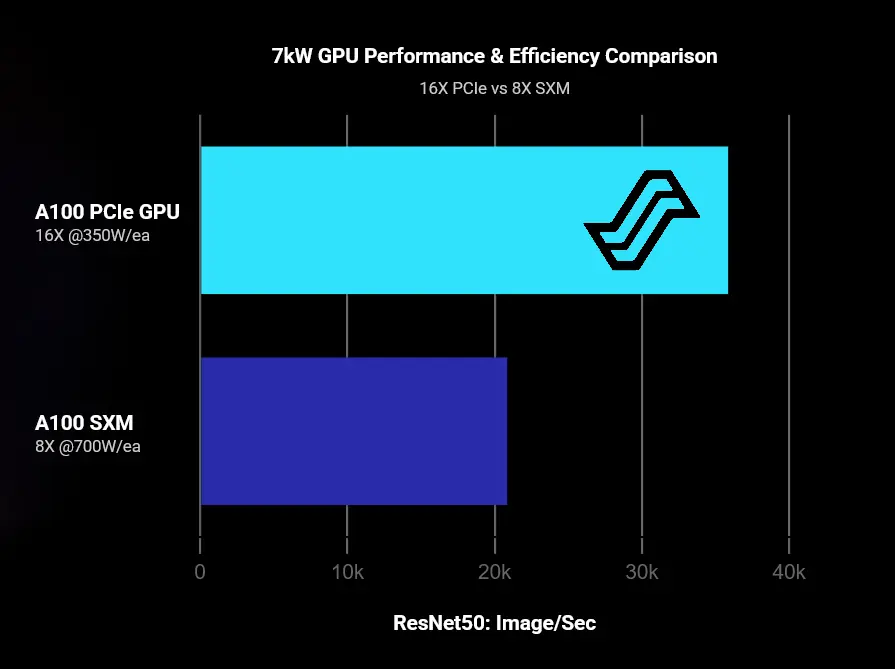

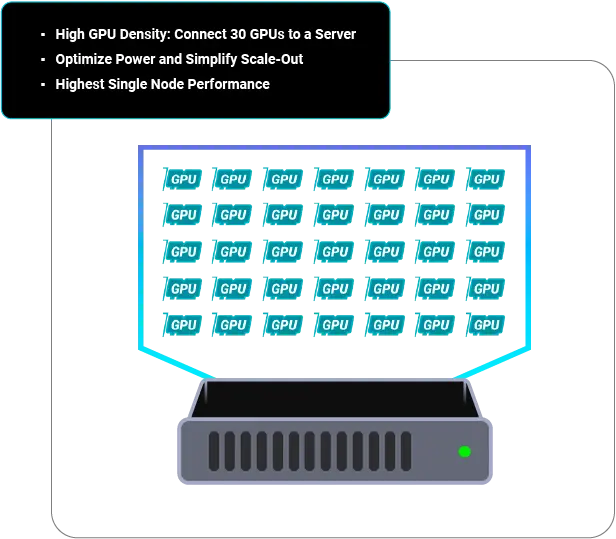

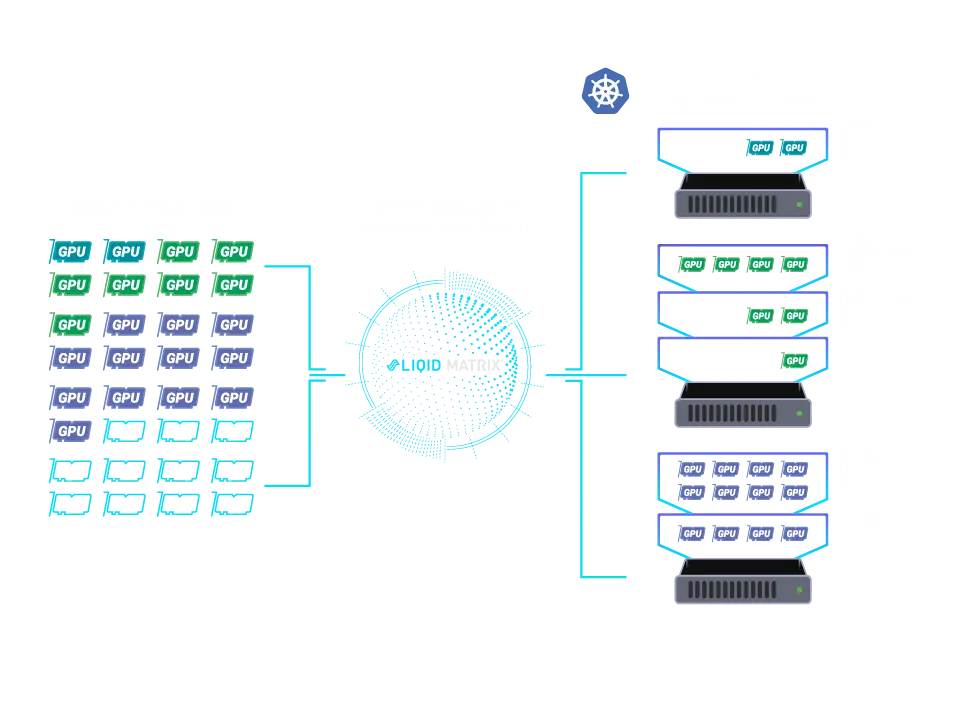

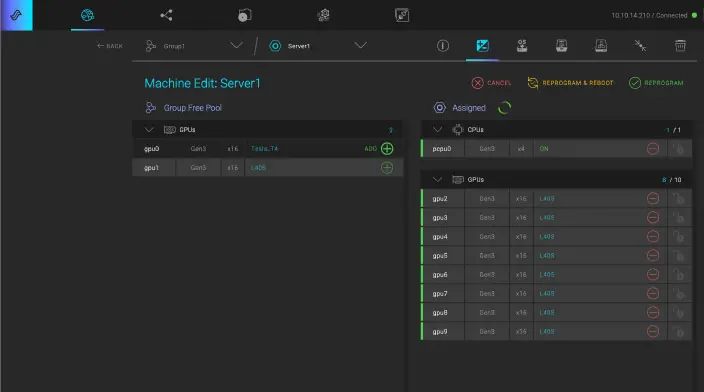

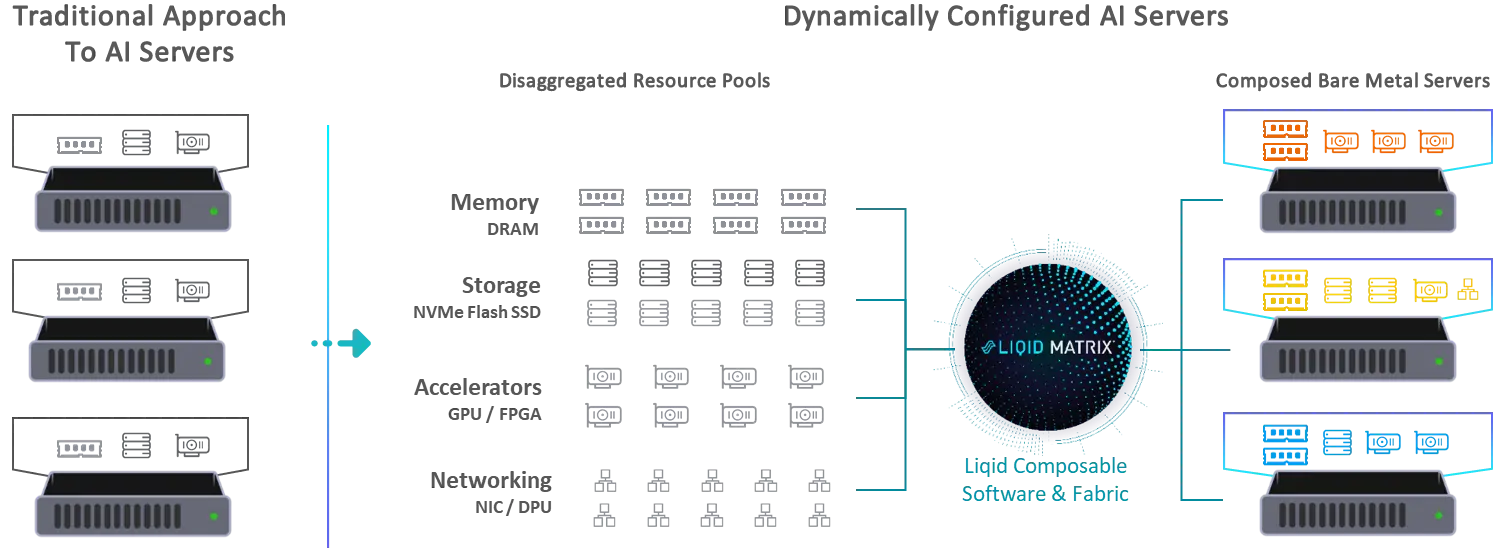

Composable Compute

Developed in partnership with Liqid, Edgecore's composable infrastructure solution uses industry-standard data center components to build a flexible, scalable architecture from pools of disaggregated resources.
