Nexvec™

A Turnkey Open Infrastructure Solution for Enterprise AI

Composable Computing

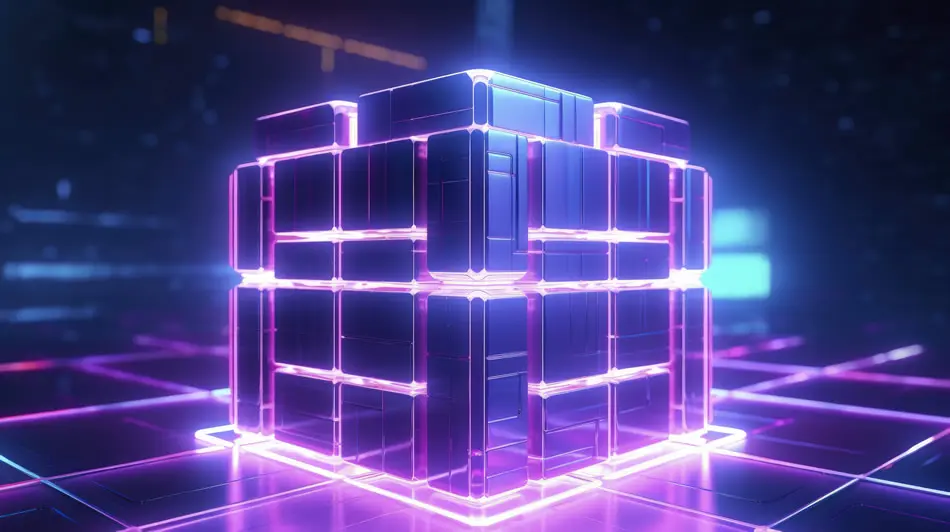

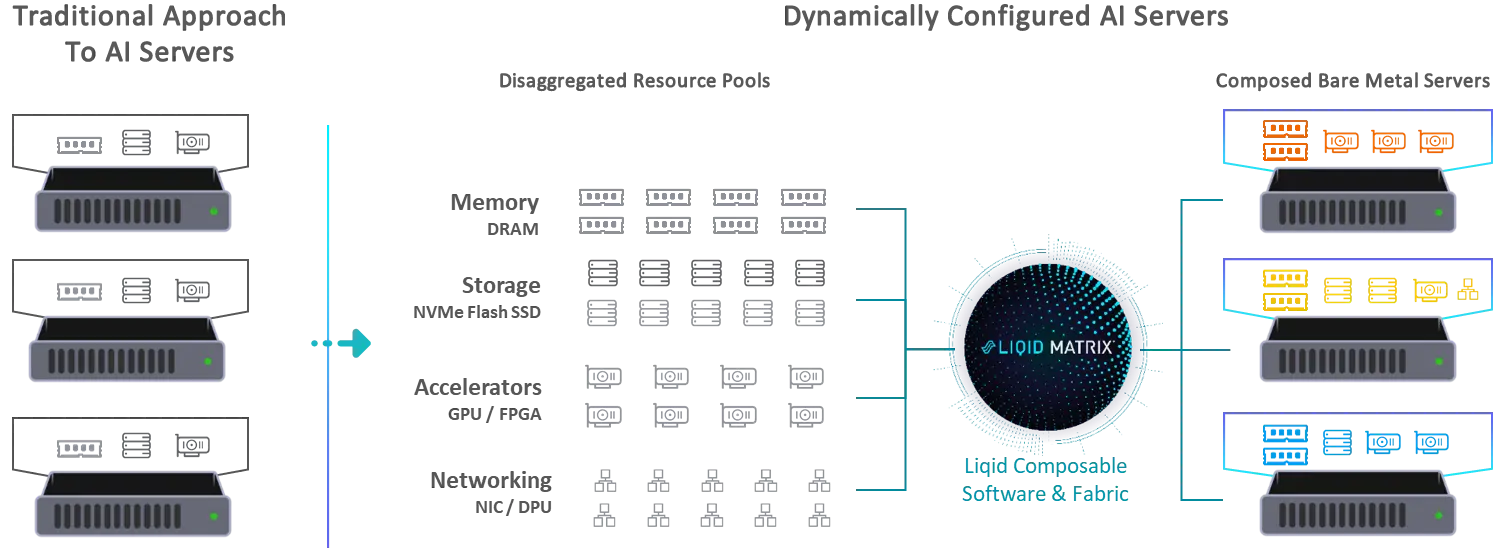

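Edgecore has partnered with Liqid on a composable infrastructure solution that uses industry-standard data center components to create a flexible, scalable architecture built from disaggregated resource pools.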

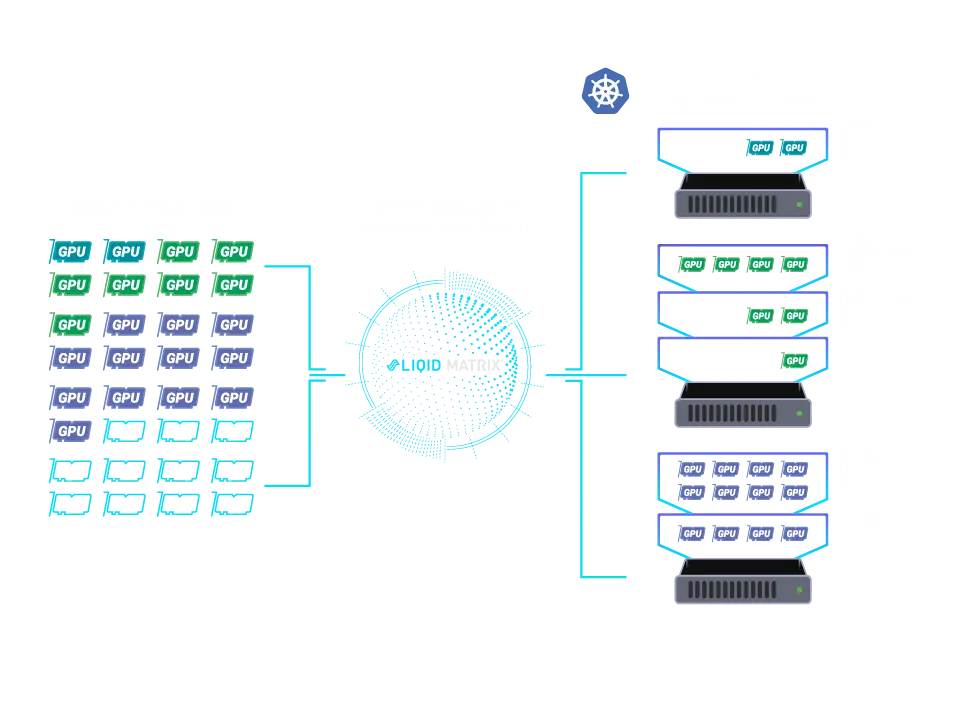

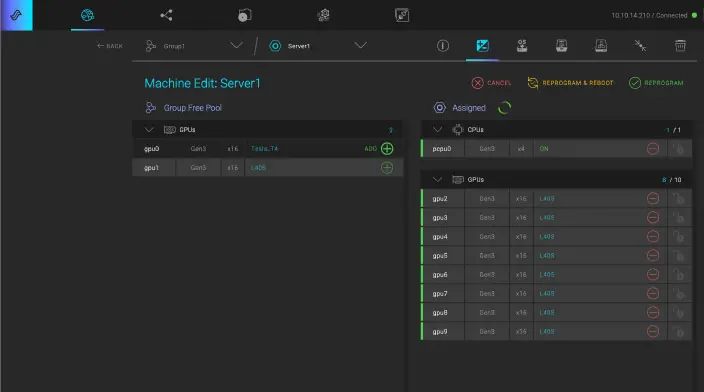

On-Demand Dynamic Resource Allocation

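Compute, networking, storage, GPUs, FPGAs, and Intel® Optane™ memory are interconnected through an intelligent fabric, enabling dynamically configurable bare-metal servers. Each server is tailored to carry exactly the physical resources its application requires, no more and no less.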

Improve Efficiency, Reduce Waste

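By disaggregating and reallocating hardware as needed, you can double or even triple resource utilization while significantly lowering power consumption and reducing your carbon footprint. This is especially valuable for AI-centric deployments.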

Powered by Liqid Matrix

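Edgecore's composable infrastructure is powered by Liqid Matrix, which enables the infrastructure to adapt to workload demands in real time. Make full use of your resources while improving scalability and responsiveness.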

Automation for Next-Generation Workloads

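Infrastructure processes can be fully automated, unlocking new efficiencies to meet the data demands of next-generation applications such as AI, IoT, DevOps, cloud, and edge computing, with support for NVMe over Fabrics (NVMe-oF) and GPU-over-Fabric (GPU-oF) technologies.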

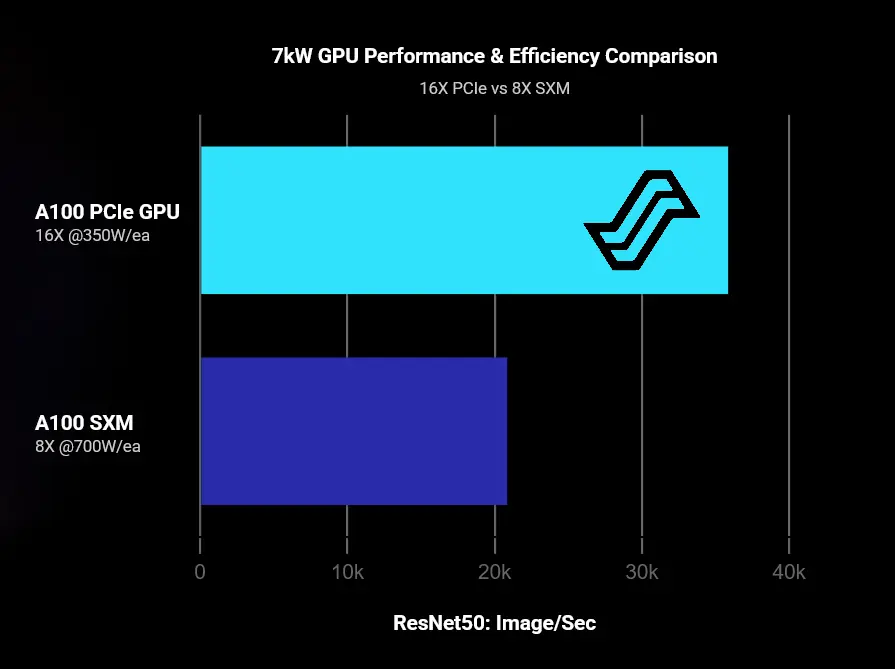

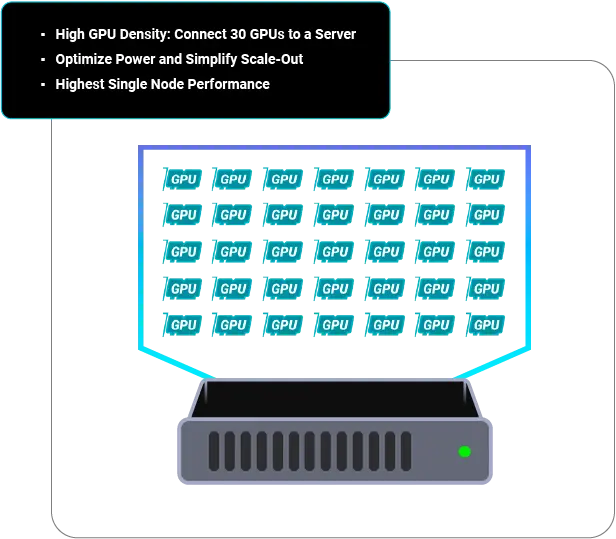

Maximum AI Performance

Optimized Efficiency

Maximum Flexibility

Reduced Power Consumption

Your AI, your choice. Harness the power of silicon diversity for unmatched flexibility and agility.

Contact us today, and let's start transforming your business from the ground up.

No. 1, Creation Rd. III, Hsinchu Science Park, Hsinchu 30077, Taiwan

Tel: +886-3-5638888