Is your network the bottleneck in your AI/ML cluster?

As data centers scale to train distributed neural networks, traditional networking architectures often fail to meet the demands of large, long-lived "elephant flows" and tightly synchronized parallel computation. The difference between a stalled training job and a successful deployment often lies in the efficiency of your transport protocol.

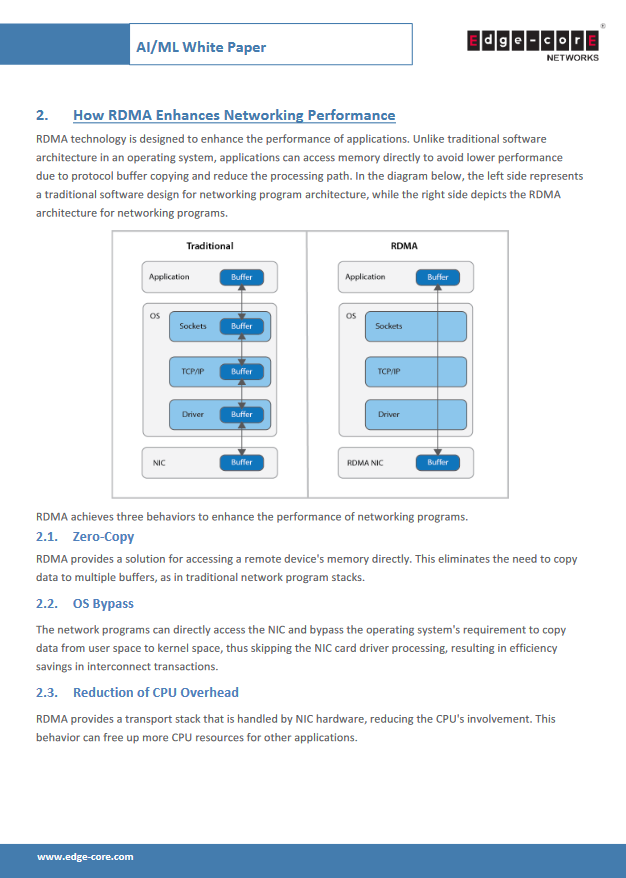

This technical whitepaper, "AI/ML Networking Background," is a guide to optimizing high-performance computing environments with RDMA over Converged Ethernet version 2 (RoCEv2). Traditional TCP/IP stacks burden the CPU with repeated buffer copies between kernel and application memory. RDMA avoids this with "Zero-Copy" and "OS Bypass": the network adapter reads and writes application memory on the remote host directly, without involving the remote CPU or kernel, which significantly reduces latency and CPU overhead.

Inside, you will discover:

- The RoCEv2 Advantage: Why RoCEv2, which carries RDMA traffic over UDP/IP so it can be routed across Layer 3 Ethernet fabrics, offers a more scalable, cost-effective alternative to InfiniBand without sacrificing performance.

- Achieving Lossless Ethernet: A deep dive into configuring Priority Flow Control (PFC) to prevent packet loss during bursts.

- Congestion Management: How to implement Explicit Congestion Notification (ECN) alongside PFC so that senders dynamically reduce their transmission rates as switch queues build, before congestion escalates into PFC pauses.
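The interplay between ECN and PFC can be illustrated with a toy model of a single switch egress queue. This is a minimal sketch, not taken from the report: it assumes a RED-style marking curve (similar to the Kmin/Kmax/Pmax scheme described for DCQCN on RoCEv2 fabrics), and the class name, function names, and threshold values are hypothetical, chosen only for illustration. The design point it encodes is ordering: ECN marking starts at a shallower queue depth than the PFC pause threshold, so senders are asked to slow down before the link has to be paused.

```python
from dataclasses import dataclass


@dataclass
class QueueThresholds:
    """Hypothetical per-queue thresholds, in KB of buffered data."""
    ecn_kmin: int    # no ECN marking at or below this depth
    ecn_kmax: int    # at or above this depth, every packet is marked
    ecn_pmax: float  # marking probability just below ecn_kmax
    pfc_xoff: int    # send a PFC PAUSE upstream at or above this depth

    def __post_init__(self) -> None:
        # ECN must start reacting before PFC pauses the link.
        if not (self.ecn_kmin < self.ecn_kmax <= self.pfc_xoff):
            raise ValueError("expected ecn_kmin < ecn_kmax <= pfc_xoff")


def ecn_mark_probability(depth_kb: float, t: QueueThresholds) -> float:
    """RED-style marking: 0 below kmin, linear ramp to pmax, 1 at kmax and above."""
    if depth_kb <= t.ecn_kmin:
        return 0.0
    if depth_kb >= t.ecn_kmax:
        return 1.0
    return t.ecn_pmax * (depth_kb - t.ecn_kmin) / (t.ecn_kmax - t.ecn_kmin)


def pfc_pause(depth_kb: float, t: QueueThresholds) -> bool:
    """PFC is the last line of defense: pause only when the queue is nearly full."""
    return depth_kb >= t.pfc_xoff


if __name__ == "__main__":
    # Illustrative values only; real thresholds depend on buffer size and link speed.
    thresholds = QueueThresholds(ecn_kmin=100, ecn_kmax=400, ecn_pmax=0.2, pfc_xoff=800)
    for depth in (50, 250, 500, 900):
        p = ecn_mark_probability(depth, thresholds)
        print(f"depth={depth:4d} KB  ecn_mark_p={p:.2f}  pfc_pause={pfc_pause(depth, thresholds)}")
```

In a RoCEv2 deployment, the receiving adapter turns ECN-marked packets into congestion notifications back to the sender, which lowers its rate; in this sketch, a queue at 250 KB is already being marked while PFC stays idle until 800 KB.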

Download the full report to learn how to tune your network for the high throughput and low latency required by modern AI workloads.