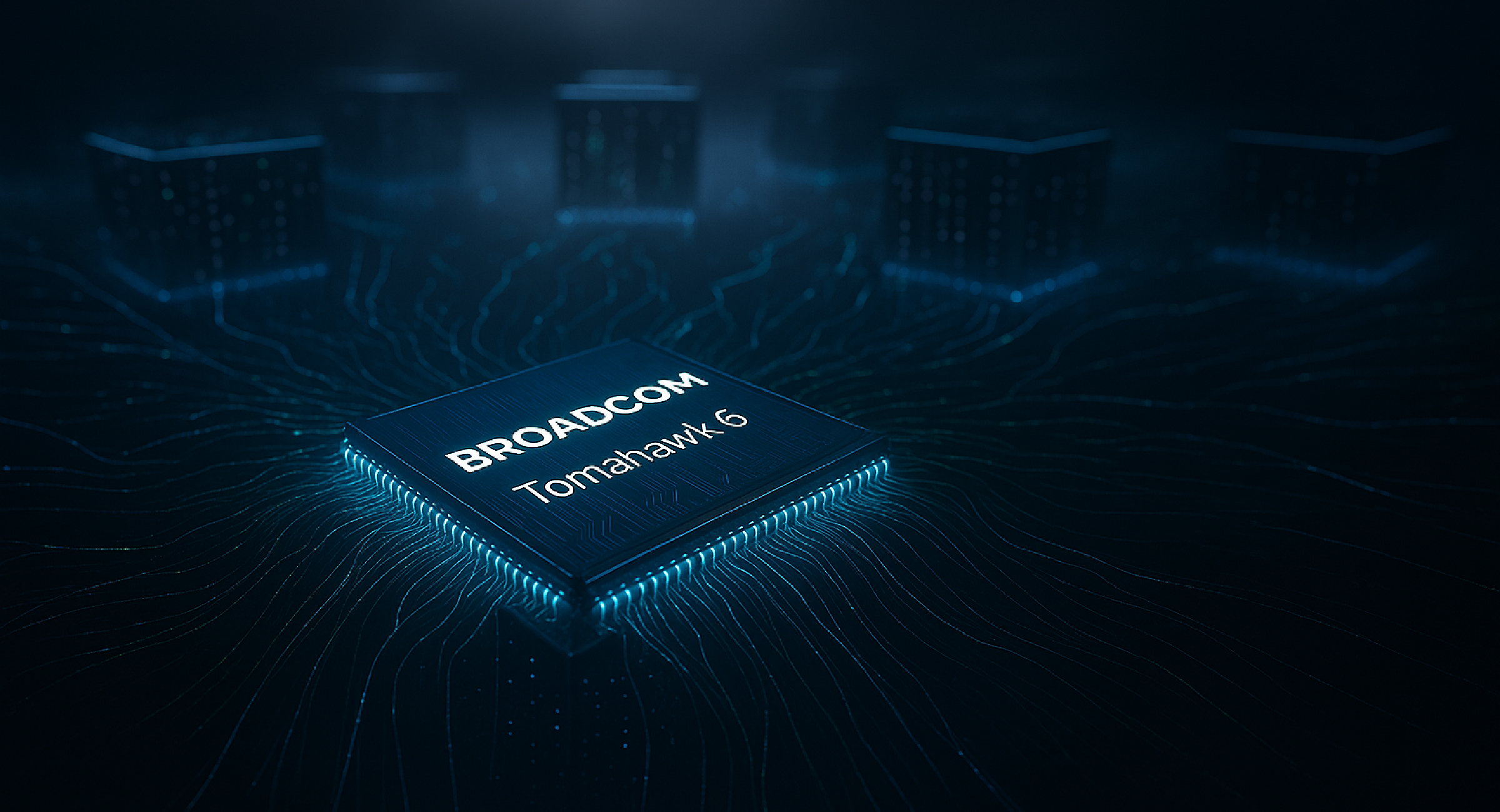

I have worked in network switching for my entire career, which began at DEC. I was part of the team that introduced Ethernet to the world, and I remember how its 10 Mbps speed felt like lightning compared with the 56K leased lines and point-to-point T1 circuits we were all used to. Ethernet sounded amazing to everyone we talked to about it, but the basic requirements for deploying it were still being worked out: how to run Ethernet, originally designed for coaxial cable, over twisted pair, and how to build transparent, cost-effective switching elements (instead of repeaters) that could greatly extend the reach and scalability of LAN technology. So I smile when I think about how far we have come and hear about new chipsets such as the latest Broadcom Tomahawk 6.

Broadcom's line of merchant silicon has long been synonymous with high-performance network switching, forming the foundation of data centers and hyperscale environments worldwide. With each new generation, Broadcom pushes the limits of bandwidth, density, and functionality, consistently delivering the innovations needed to keep pace with an ever-evolving digital landscape. The recent announcement of Tomahawk 6, its sixth-generation flagship switch chip, marks another significant leap forward, breaking the 100 Tbps barrier and cementing its role as a foundational element for building global AI infrastructure. (For reference: 100 Tbps is seven orders of magnitude more than the 10 Mbps of first-generation Ethernet, first introduced by Intel, DEC, and Bob Metcalfe at Xerox around 1978.)

This should surprise no one. Over the past decade, Broadcom's switch chip releases have shown steady progress toward higher performance and efficiency in a software-defined package. Broadcom has proven that switch chips do not have to be fixed-function devices that require expensive, time-consuming ASIC respins to fix bugs or performance problems. From the early multi-terabit chips to later generations that integrated advanced telemetry and programmable pipelines, each product has built on the last, culminating in the powerful Tomahawk 6. This progress was never about raw speed alone; it was about creating increasingly intelligent and adaptive networking silicon capable of handling the most demanding workloads. In the age of AI, support for cognitive routing and Ultra Ethernet (read: "lossless") in the latest Tomahawk immediately becomes the benchmark against which all other solutions will be measured. It is no wonder, then, that the Dell'Oro Group recently reported that demand for Ethernet in AI back-end networks now exceeds demand for every other technology.

Tomahawk 6: A Closer Look at the Breakthrough Innovations

As I said above, it is not just about speed. Tomahawk 6 is not merely a new, faster chip; it is a substantial rethinking of how the network serves AI training and inference, designed to meet unprecedented demands.

Here are the five most impressive new or significantly improved features compared with its predecessors:

1. Unprecedented bandwidth and port density:

Tomahawk 6 breaks previous bandwidth records, offering 102.4 Tbps of switching capacity. That translates into support for up to 64 ports of 1.6 Tbps, 128 ports of 800 Gbps, 256 ports of 400 Gbps, or 512 ports of 200 Gbps on a single chip. An increase of this size is critical for building AI clusters, where hundreds or thousands of GPUs must communicate with minimal latency and maximum throughput to exchange large datasets and model parameters. This density makes it possible to build remarkably flat and efficient network topologies.
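The port counts above follow directly from dividing the switching capacity by the port speed. The short Python sketch below reproduces that arithmetic; the capacity and port speeds are taken from the text, while the function and constant names are hypothetical and chosen only for illustration.

```python
# Illustrative arithmetic only. The 102.4 Tbps capacity and the port speeds
# come from the text above; the names used here are hypothetical.
SWITCH_CAPACITY_GBPS = 102_400  # 102.4 Tbps expressed in Gbps


def max_ports(port_speed_gbps: int, capacity_gbps: int = SWITCH_CAPACITY_GBPS) -> int:
    """Return how many ports of the given speed fit in the switching capacity."""
    if port_speed_gbps <= 0:
        raise ValueError("port speed must be positive")
    return capacity_gbps // port_speed_gbps


if __name__ == "__main__":
    for speed in (1600, 800, 400, 200):
        print(f"{speed:>5} Gbps ports: {max_ports(speed)}")
```

Each halving of the port speed doubles the port count, which is why the same chip can serve either as a small number of very fast links or as a very high-radix switch.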

2. Ultra-low latency for AI/ML workloads:

One of the most important challenges in large-scale AI training is minimizing latency in GPU-to-GPU communication. Tomahawk 6 introduces significant architectural enhancements designed specifically to reduce network latency. It does so through optimized packet processing, reduced buffering delays, cognitive routing, and advanced traffic management algorithms. In AI, shaving even microseconds off latency can lead to significantly faster model training, lower costs, and better overall cluster efficiency.

3. Improved congestion and flow control:

AI workloads are characterized by "elephant flows": sustained, massive data transfers between compute nodes. Managing these flows efficiently without creating bottlenecks is paramount. Tomahawk 6 includes more sophisticated congestion-management mechanisms, including enhanced ECN (Explicit Congestion Notification) capabilities and intelligent buffer management. These features keep data moving smoothly and efficiently even under peak load, preventing performance degradation in critical AI applications. As Ultra Ethernet and its enhanced flow-control capabilities gain traction, Tomahawk 6 also provides full support for it.
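To make the ECN idea above more concrete, here is a minimal Python sketch of a generic threshold-based marking rule of the kind commonly used with ECN (in the style of WRED): no marking below a minimum queue depth, always mark above a maximum, and a linearly rising probability in between. This is not Broadcom's implementation and not a Tomahawk 6 API; the thresholds `k_min`, `k_max`, and `p_max`, and both function names, are assumptions made for illustration.

```python
import random
from typing import Callable


def ecn_mark_probability(queue_depth: int, k_min: int, k_max: int,
                         p_max: float = 1.0) -> float:
    """Generic WRED-style ECN marking probability (illustrative assumption).

    Below k_min nothing is marked, at or above k_max everything is marked,
    and in between the probability rises linearly from 0 toward p_max.
    """
    if k_min >= k_max:
        raise ValueError("k_min must be smaller than k_max")
    if queue_depth <= k_min:
        return 0.0
    if queue_depth >= k_max:
        return 1.0
    return p_max * (queue_depth - k_min) / (k_max - k_min)


def should_mark(queue_depth: int, k_min: int, k_max: int, p_max: float = 1.0,
                rng: Callable[[], float] = random.random) -> bool:
    """Decide whether to set the ECN congestion mark on one packet."""
    return rng() < ecn_mark_probability(queue_depth, k_min, k_max, p_max)


if __name__ == "__main__":
    # Hypothetical thresholds, expressed in buffer cells.
    for depth in (100, 500, 900):
        print(depth, ecn_mark_probability(depth, k_min=200, k_max=800))
```

Marking packets early, rather than dropping them once the buffer is full, lets senders of elephant flows slow down before loss occurs, which is the behavior a lossless fabric depends on.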

4. Enhanced in-band network telemetry (INT) and visibility:

As networks grow more complex, visibility into their core behavior becomes critical for troubleshooting and optimization. Tomahawk 6 significantly extends the already extensive telemetry capabilities of earlier Tomahawk generations. It provides deeper, more granular in-band network telemetry, allowing operators to monitor network health, detect microbursts, and pinpoint performance anomalies in real time. This level of visibility is invaluable for keeping heavily loaded AI infrastructure healthy and performant, where even minor issues can stall expensive training jobs.
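As an illustration of what microburst detection from telemetry can look like on the operator side, the following Python sketch scans timestamped queue-depth samples and reports short runs above a threshold. The sample format (timestamp in microseconds, queue depth), the threshold, the duration limit, and all names are hypothetical; this is not the format of Broadcom's telemetry or any real export API.

```python
from typing import Iterable, List, NamedTuple, Optional, Tuple


class Burst(NamedTuple):
    start_us: float
    end_us: float
    peak_depth: int


def find_microbursts(samples: Iterable[Tuple[float, int]], threshold: int,
                     max_duration_us: float) -> List[Burst]:
    """Find short runs of consecutive samples whose depth exceeds threshold.

    Runs longer than max_duration_us are treated as sustained congestion
    and are not reported as microbursts.
    """
    bursts: List[Burst] = []
    start: Optional[float] = None
    last = 0.0
    peak = 0
    for ts, depth in samples:
        if depth > threshold:
            if start is None:
                start, peak = ts, depth   # a new run begins
            else:
                peak = max(peak, depth)
            last = ts
        elif start is not None:
            if last - start <= max_duration_us:
                bursts.append(Burst(start, last, peak))
            start = None                  # the run has ended
    # Close a run that is still open when the samples end.
    if start is not None and last - start <= max_duration_us:
        bursts.append(Burst(start, last, peak))
    return bursts


if __name__ == "__main__":
    demo = [(0, 10), (10, 900), (20, 950), (30, 20), (40, 15), (50, 990), (60, 10)]
    for burst in find_microbursts(demo, threshold=800, max_duration_us=100):
        print(burst)
```

Events this short are easily missed by polling counters every few seconds, which is why fine-grained telemetry from the switch itself matters.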

5. Greater programmability and feature-set flexibility:

Tomahawk 6 continues Broadcom's commitment to programmable pipelines, giving network operators more flexibility to customize packet processing and introduce innovative network functions. This programmability is critical in the fast-changing AI landscape, where new protocols and optimized data paths may be required. It also allows hyperscalers to differentiate their networks and integrate their own optimizations, giving them a competitive edge.

The Indispensable Role of Tomahawk 6 in the AI Revolution

The global frenzy around building AI infrastructure is without parallel. It has produced proposals for data centers the size of Manhattan, helped revive a once-abandoned nuclear power industry that is now seen as a key enabler, catalyzed funding for more than 1,000 AI startups, and made more than a million millionaires.

AI requires networks that can not only sustain enormous throughput but also operate with unprecedentedly low latency, high reliability, and precise control. This is where Tomahawk 6 truly shows what it can do.

- Scaling AI superclusters: Training state-of-the-art AI models requires distributing the workload across thousands of GPUs. Tomahawk 6's 102.4 Tbps of switching capacity, port speeds of up to 1.6 Tbps, and high port density make it possible to build exceptionally flat, high-radix network topologies that minimize hop count and maximize bandwidth between these distributed compute resources. This is essential for avoiding network bottlenecks that could otherwise become the limiting factor in AI model development.

- Enabling disaggregated infrastructure: As AI workloads become more diverse and dynamic, the need for disaggregated compute, storage, and acceleration resources grows. Networks built on Tomahawk 6 provide the high-speed interconnects these components need to function as a single high-performance system, allowing resources to be allocated flexibly and used as efficiently as possible. In essence, Tomahawk 6 makes all of these resources available at wire speed.

- Future-proofing AI innovation: The software-defined nature and rich feature set of Tomahawk 6 provide a great deal of headroom for the future, something that was never available in the days of fixed-function ASICs. As AI algorithms and network protocols evolve, the underlying network infrastructure built on Tomahawk 6 can adapt, ensuring longevity and protecting significant infrastructure investments.
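To illustrate why a high radix leads to flatter topologies, the Python sketch below applies the standard sizing arithmetic for an idealized non-blocking folded-Clos (leaf-spine) fabric: with switches of radix k, a two-tier fabric supports k²/2 endpoints and a three-tier fat tree supports k³/4. These formulas are textbook assumptions (half of each leaf's ports face endpoints, one link per leaf-spine pair), not figures from Broadcom, and the function names are hypothetical.

```python
# Idealized, non-blocking folded-Clos sizing (textbook assumption, not a
# vendor figure): every switch has the same radix and each leaf splits its
# ports evenly between endpoints and uplinks.

def two_tier_endpoints(radix: int) -> int:
    """Maximum endpoints in a non-blocking two-tier leaf-spine fabric."""
    if radix < 2 or radix % 2:
        raise ValueError("radix must be a positive even number")
    return radix * radix // 2


def three_tier_endpoints(radix: int) -> int:
    """Maximum endpoints in a non-blocking three-tier fat tree."""
    if radix < 2 or radix % 2:
        raise ValueError("radix must be a positive even number")
    return radix ** 3 // 4


if __name__ == "__main__":
    # Radix values correspond to the 1.6 Tbps and 200 Gbps port
    # configurations mentioned in the article.
    for radix in (64, 512):
        print(f"radix {radix}: two-tier {two_tier_endpoints(radix):,}, "
              f"three-tier {three_tier_endpoints(radix):,}")
```

Under these assumptions, a radix of 512 reaches more than 130,000 endpoints with only two switching tiers, so a cluster that would otherwise need a third tier, and the extra hops that come with it, can stay flat.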

To sum up: IT professionals who grew up with Ethernet find themselves on fairly familiar ground, but at a scale that was once impossible to imagine. Gone are the days when each networking vendor developed its own custom ASICs; software now defines high-performance networking platforms built on merchant silicon. Broadcom's Tomahawk 6 shows how far those capabilities have come. It is more than just another high-speed networking chip; it is a critical enabler for the next wave of AI innovation. Its unprecedented bandwidth, ultra-low latency, and advanced control features are exactly what the growing AI industry needs to unlock new possibilities and scale to meet the demands of a data-driven world. As the AI revolution continues, Broadcom's switch families (including the latest Tomahawk 6) will play an indispensable role in powering the intelligence that shapes our future. As a long-term supplier of more than half of the world's whitebox solutions, Accton will lead the way, continuing to deliver the highest-quality open, software-defined infrastructure based on Broadcom chipsets that the hyperscale, enterprise, and service provider communities need.


November 11, 2025